Having the option to connect to DB2 from Azure Data Factory is a great help in migrating from more legacy database platforms to the cloud. Or to ingest these systems in your brand new, cloud based modern datawarehouse.

However, one snag I quickly came across and for which “the internet” didn’t have a vast amount of documentation is what happens when you come across an error reading what seems like a simple text field.

The most frustrating error message is the following (copying it in its entirety, to help out anyone that has a similar issue)

Failure happened on Sink side. ErrorCode=SqlBulkCopyInvalidColumnLength,Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=SQL Bulk Copy failed due to receive an invalid column length from the bcp client.,Source=Microsoft.DataTransfer.ClientLibrary,Type=System.Data.SqlClient.SqlException,Message=The service has encountered an error processing your request. Please try again. Error code 4815.Not clear what column, what the expected length was, or other tips. After some troubleshooting, I could limit it to 1 column that was defined in the destination (SQL DB) as varchar(3), and for which the data also looked like 3 characters in the source.

When reading I was using SELECT CURID FROM … and still go this error message. This must be the culprit!

Next step was to increase the size of the sink column, to see what the content looked like. Increasing to varchar(10) allowed the flow to succeed and the content was 0xD8C340 where it should be QC , 0xE2D6E4 where it should be SOU, etc…

So, this must be a conversion issue, but can I avoid it.

After long searching, I noticed that SELECT CAST(CURID AS CHAR(3) CCSID 37) as “CURID” FROM … worked, but notice you already have to add specifically a AS “CURID” or you get another error. Only this CCSID converted correctly in my case. But the major downside is that it adds 37 characters to my select, for just 1 field.

The bad news was, I had about 10 fields in each select, and about 152 selects to go, which is a lot of typing… There must be a way to address this more efficient.

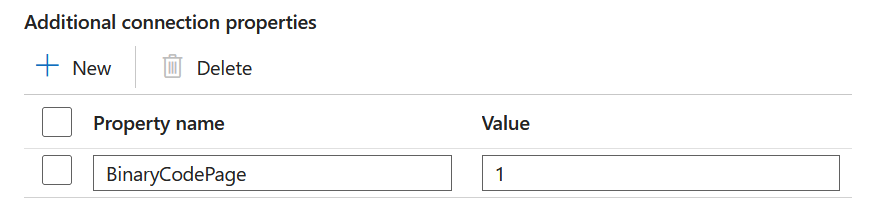

The only place where you would be able to add any configuration to this driver would have to be the linked service. And in a little corner there was indeed just this option left:

Unable to find any documentation on the DB2 driver for Data Factory site on which additional properties are available, I did manage to find what would be the solution to this problem:

Add the property BinaryCodePage, with the value 1

By default this property is set to 0, which means : do not convert

1 means convert according to the Code Page in the database

The other possible values are the number of the codepage, if you want a specific one.

After setting it to one, there was no need for the CAST function anymore, and all data got extracted as expected.