Prerequisites

The preferred way to use the PowerBI API from Azure Data Factory (ADF) is by using a Service Principal.

This has the benefit of not requiring a license assigned to the account, and working individual independent.

The service principal can be secured and access provided or removed from it.

It is best practice to store secrets in Azure Key Vault, which I will use in below article.

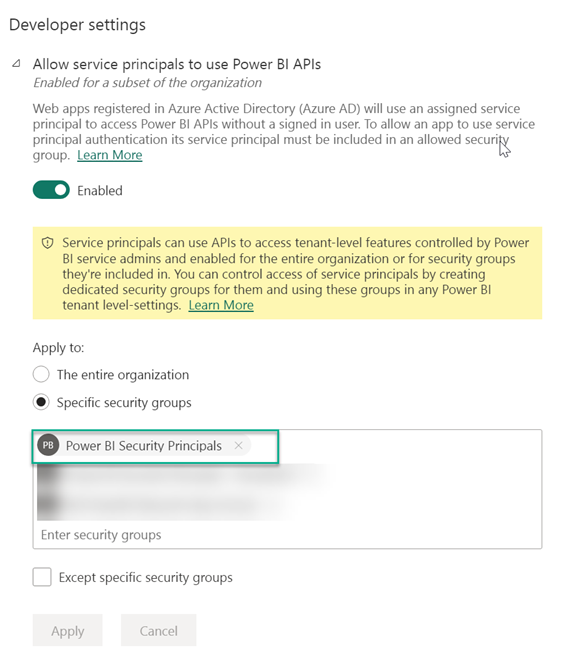

PowerBI tenant settings

Allow service principals to use PowerBI APIs

This has to be enabled (recommended to do for specific security groups).

The group holding all Service Principals that can use PowerBI APIs is “Power BI Security Principals”.

More information can be found here : Embed Power BI content in an embedded analytics application with service principal and an application secret – Power BI | Microsoft Learn

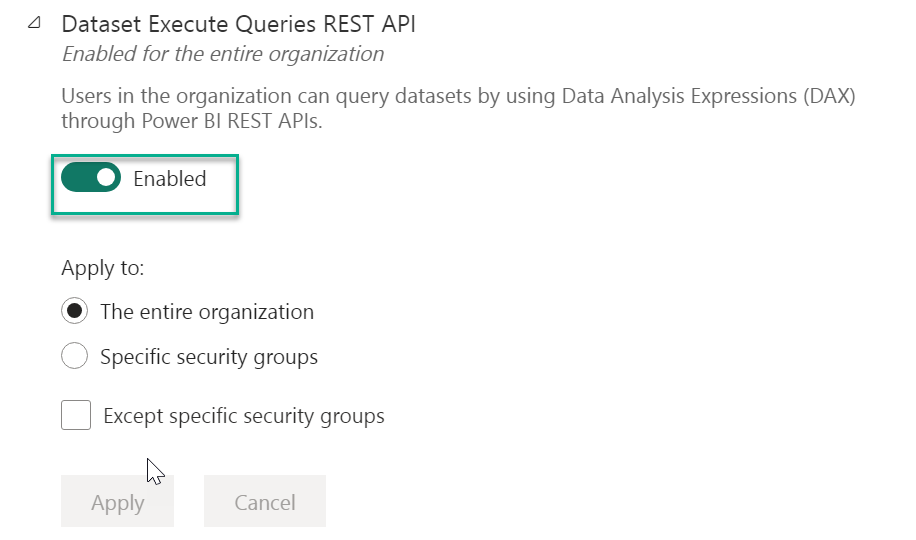

Dataset Execute Queries REST API

This is enabled by default, but a prerequisite, so added for completeness.

Service Principal

- Create an app registration in portal.azure.com. This is our service principal.

- Copy the Application ID, this will be referred to as the Service Principal ID

- Create a secret, copy the password and store in Azure Key Vault

- Add the Service Principal to the group “Power BI Security Principals” (or request this through a ticket).

That is all the configuration needed for a Service Principal. No need to add “API permissions”, they are not used with PowerBI.

PowerBI workspace

The service principal must have access to the PowerBI workspace containing the dataset you want to query/refresh.

Provide admin access to the service principal by adding him to the workspace members in PowerBI as Admin.

Checklist

- PowerBI tenant setting “Allow service principals to use Power BI APIs” enabled

- PowerBI tenant setting “Dataset Execute Queries REST API” enabled (entire org)

- Service Principal

- Store Service Principal secret in Azure Key Vault

- Service Principal in the group that is allowed to use Power BI APIs

- Service Principal is Admin in the PowerBI Workspace you want to query

No need for:

- Data Factory to have rights in the PowerBI Workspace

- Data Factory to be in the group allowed to use PowerBI APIs

- Adding API permission in the App Registration

Fundamental flow with Postman

Before diving into Azure Data Factory, let’s make sure this works from a simple client like Postman.

In Postman, you’ll have to:

- request a token for authorization

- use the token in your PowerBI REST call

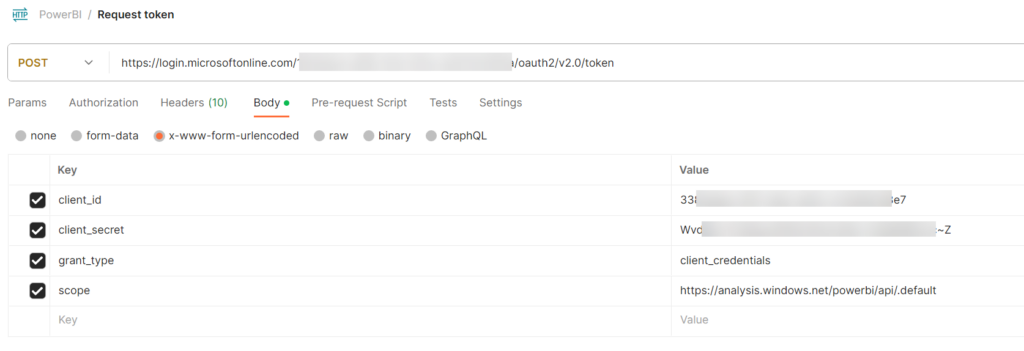

Request token

In the Header add Content-Type: application/x-www-form-urlencoded

Make sure the request is a POST to https://login.microsoftonline.com/{tenant ID}/oauth2/v2.0/token

Provide

- client_id (your service principal id = application id from app registration)

- client_secret : the secret generated in the app registration

- grant_type : client_credentials

- scope : https://analysis.windows.net/powerbi/api/.default

You can have Postman do this automatically in the Authorization section, but manually will also do.

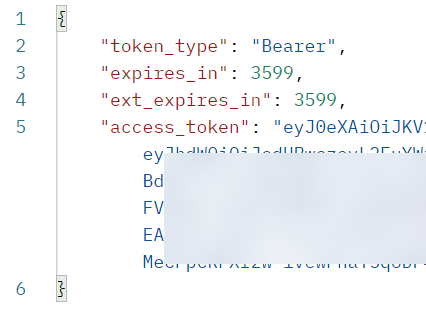

You’ll get a response with a token

Use token in PowerBI API call

In this example I’ll refresh a dataset

In the Header add Authorizaton with the value ‘Bearer’ + space + token value from access_token

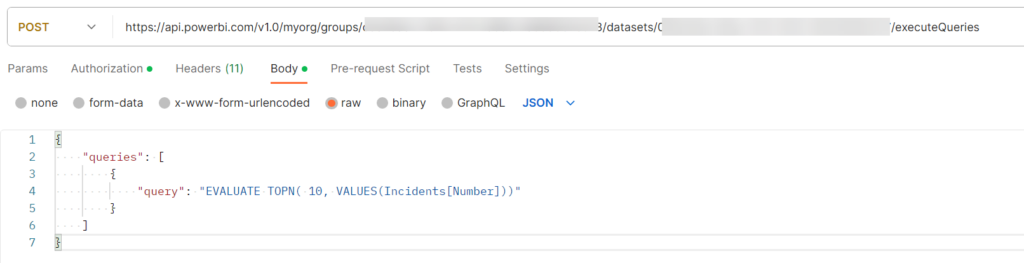

In the body provide the query, and setup the request with POST in the form “https://api.powerbi.com/v1.0/myorg/groups/{workspaceid}/datasets/{datasetid}/executeQueries”

Submit and you’ll get the results. This shows that the API is available, the security is setup correctly and basically works. Now on to doing the same but from ADF.

PowerBI API access from Data Factory

Create a pipeline with 4 parameters:

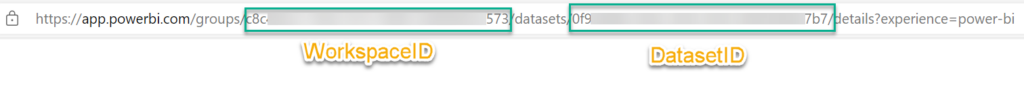

- WorkspaceID : this is the ID of the workspace your dataset is in, shown after the group in the addressbar, when opening the workspace in PowerBI

- DatasetID : this is the ID of the dataset you want to interact with, found in the address bar when opening the dataset in PowerBI

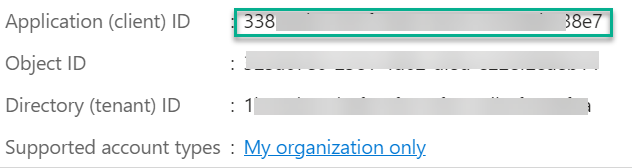

- ServicePrincipalID : this is the application ID from the app registration

This is an example, use your own app registration

- Query : e.g. EVALUATE TOPN( 50, VALUES(Incidents[Number]) )

Refresh dataset from Azure Data Factory

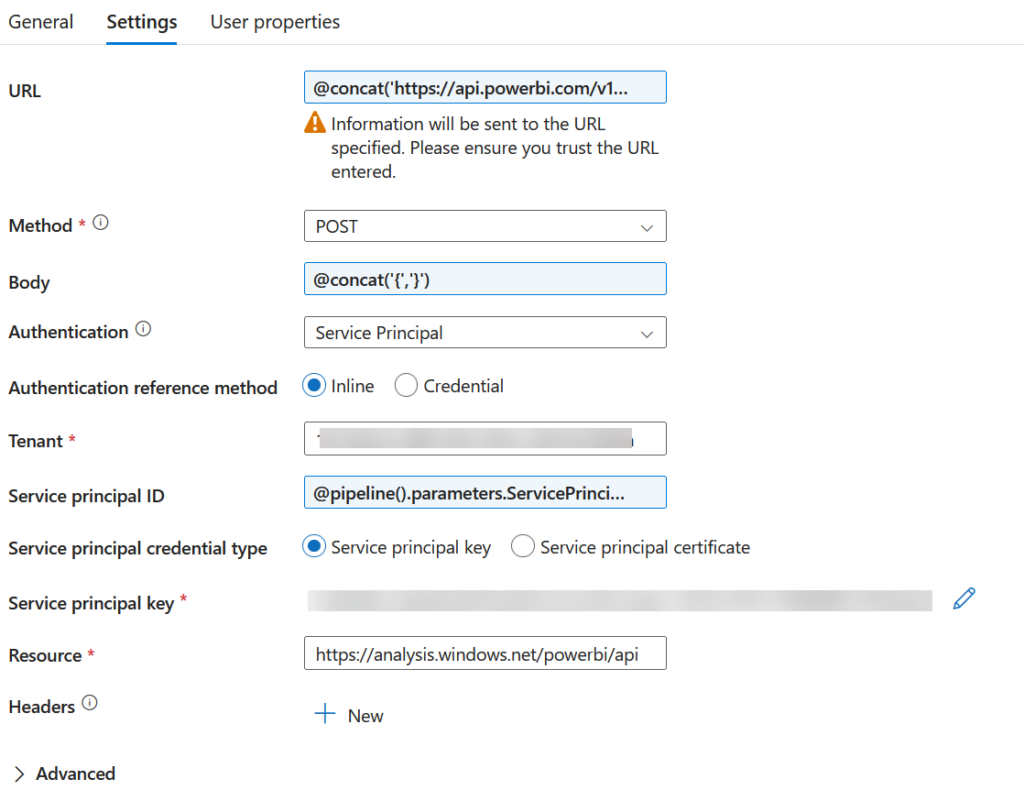

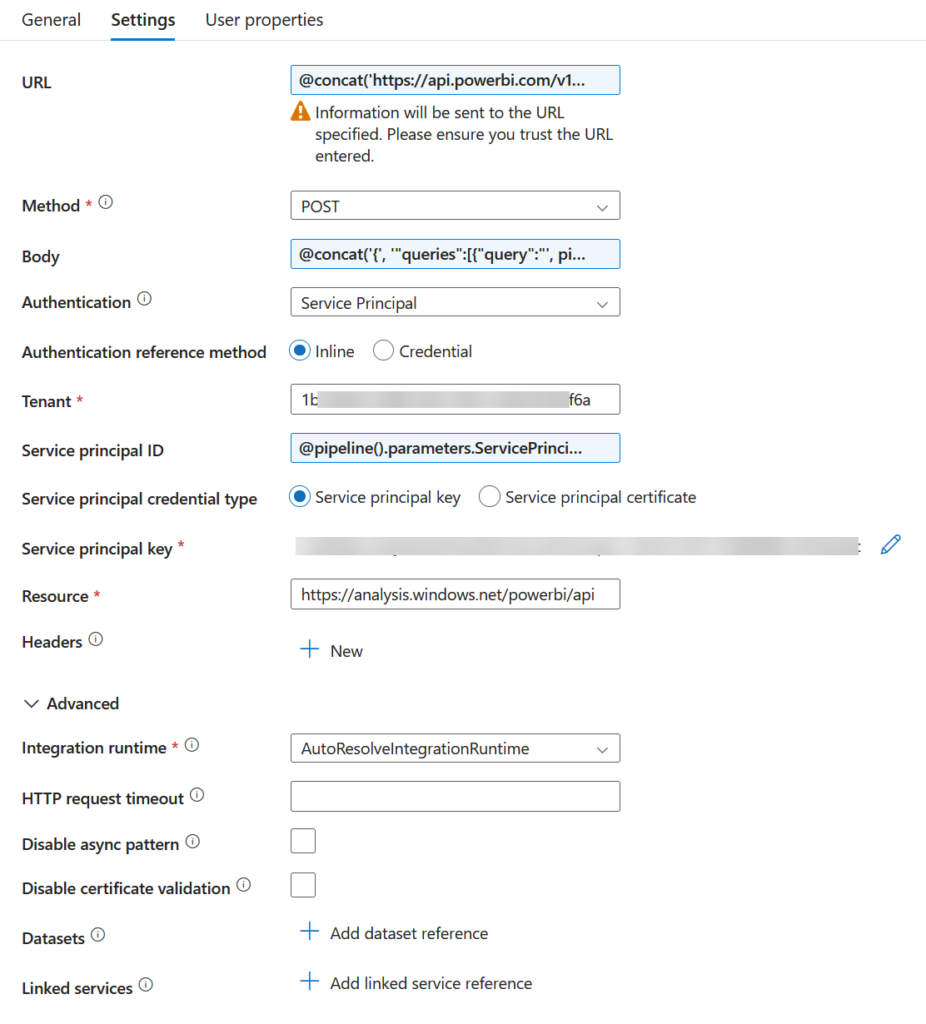

Use a Web component with following settings:

URL : this uses the parameters setup initially as best practice

@concat('https://api.powerbi.com/v1.0/myorg/groups/',

pipeline().parameters.WorkspaceId,'/datasets/',

pipeline().parameters.DataSetId,'/refreshes') Method : POST

Body :

@concat('{','}') Authentication : Service Principal

Tenant : automatically filled in, you can find it in portal.azure.com in the overview of Entra ID

Service Principal ID :

@pipeline().parameters.ServicePrincipalID Service Principal Key : retrieve from Keyvault

Resource :

https://analysis.windows.net/powerbi/api Run this pipeline with Debug and you’ll see it succeeds.

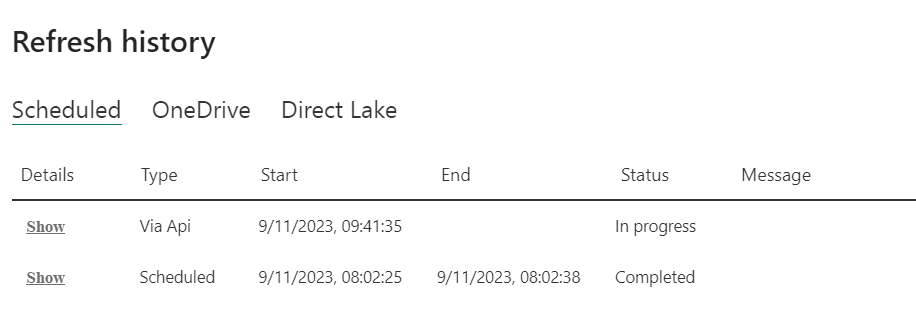

This can be confirmed with the dataset refresh history:

Note: This is just the call to start the refresh, you can build an additional loop to check on the progress of the refresh, but out of scope of this blog

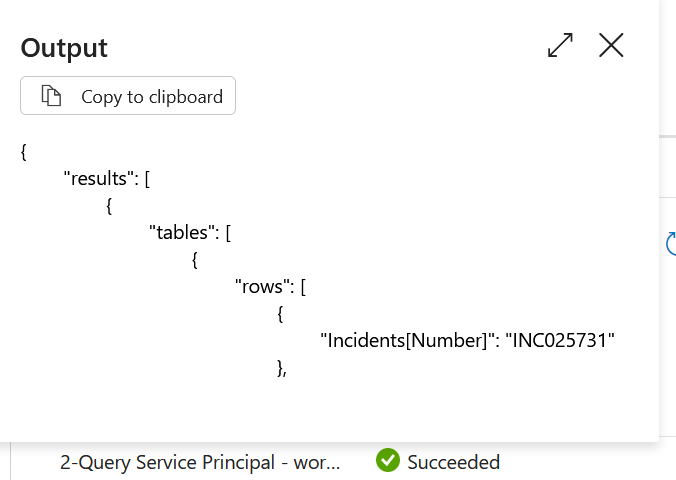

Query dataset from Azure Data Factory

First, I’ll show the setup with a Web component, which is useful to debug if the API responds correctly.

Create a web component and configure (very similar as in previous section) as follows:

URL :

@concat('https://api.powerbi.com/v1.0/myorg/groups/',

pipeline().parameters.WorkspaceId,'/datasets/',

pipeline().parameters.DataSetId,'/executeQueries') Method : POST

Body :

@concat('{',

'"queries":[{"query":"',

pipeline().parameters.Query,

'"}]',

'}') Authentication : Service Principal

Tenant : automatically filled in

Service Principal ID :

@pipeline().parameters.ServicePrincipalID Service Principal Key : retrieve from Keyvault

Resource :

https://analysis.windows.net/powerbi/api Run this pipeline with Debug and you’ll see the results in the output

Note: here you’ll encounter a 4Mb limitation, which doesn’t occur in the Copy activity

Copy data from dataset from Azure Data Factory

Add a Copy Data activity to the pipeline and configure it as follows

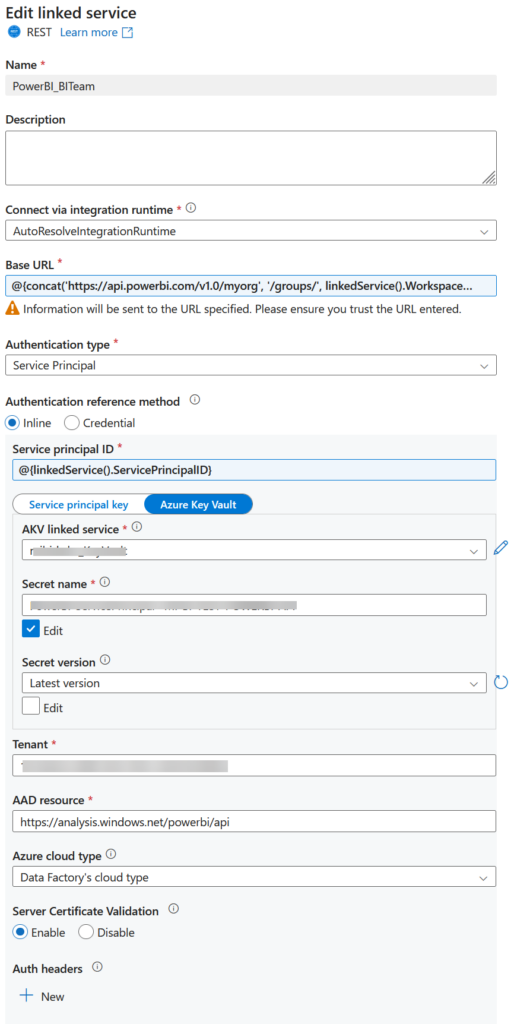

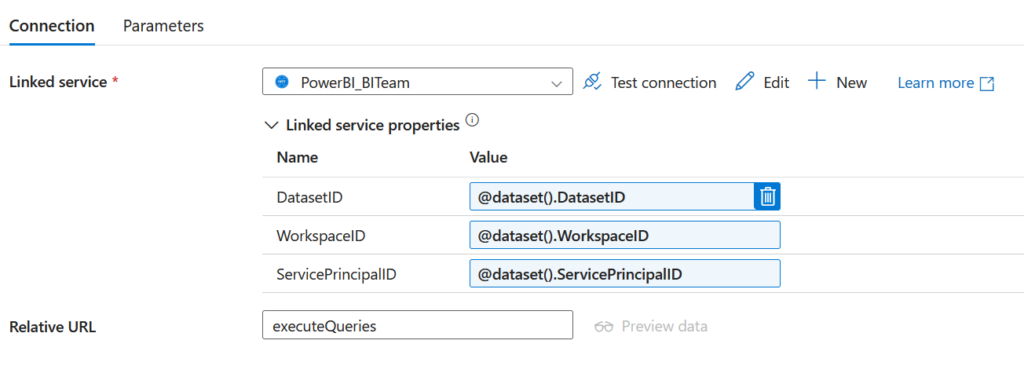

Linked Service

Setup a REST linked service with following details:

Setup 3 parameters in the linked service:

- DatasetID

- WorkspaceID

- ServicePrincipalID

Make sure to already have a linked service to Azure Key Vault (separate topic, which is out of scope of this blog).

Base URL :

@{concat('https://api.powerbi.com/v1.0/myorg',

'/groups/',

linkedService().WorkspaceID,

'/datasets/',

linkedService().DatasetID)} Authentication Type: Service Principal

Service Principal ID :

@{linkedService().ServicePrincipalID} Tenant: filled in automatically

AAD resource:

https://analysis.windows.net/powerbi/api Azure cloud type : Data Factory’s cloud type

Press test Connection and it should show Succesful

Dataset

Create a REST dataset with following configuration:

Create 3 parameters in the dataset:

- DatasetID

- WorkspaceID

- ServicePrincipalID

Select the previously created linked service

Set the values of the linked service properties as above

Add the Relative URL :

executeQueries Copy Activity

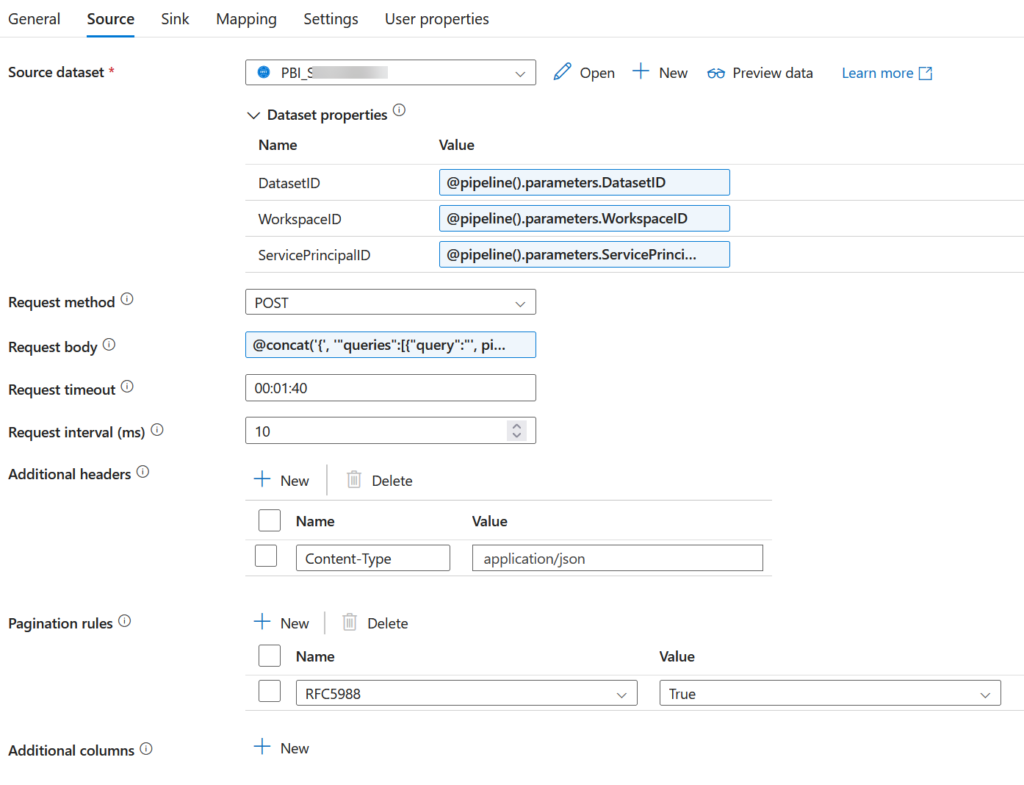

With the linked service and the dataset in place, we can now configure the Copy Activity

Source dataset : previously configured dataset

Dataset properties : as shown above, passing the pipeline parameters to the dataset

Request method: POST

Request body:

@concat('{',

'"queries":[{"query":"',

pipeline().parameters.Query,

'"}]',

'}') Additional headers: Content-Type application/json

That’s it. Configuring the Sink is very standard. You can also already do a preview of the data in the source, as well as import the Schema in mappings.

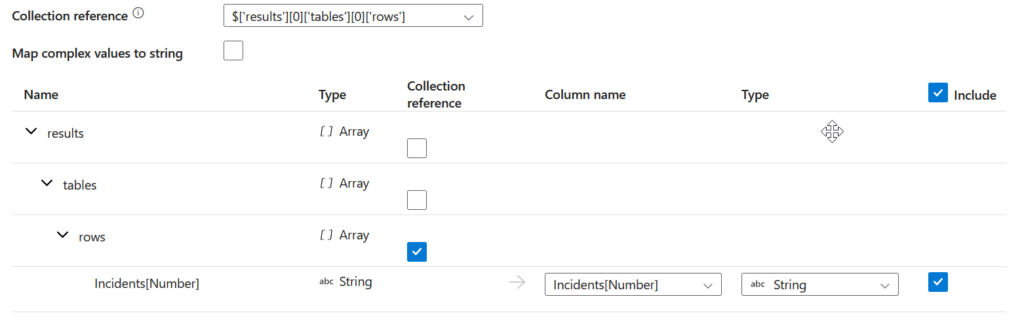

Make sure to use $[‘results’][0][‘tables’][0][‘rows’] for the Collection reference, so you can mapping the fields of the rows

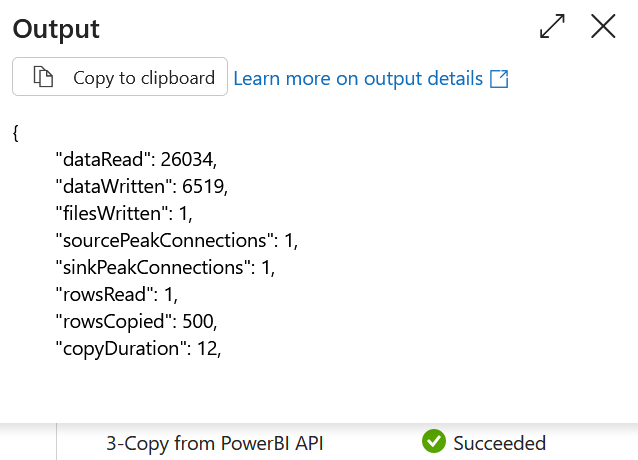

Start a Debug run and the copy fill succeed.

aran

hi, thanks for the post. it helped to make a successful request, but I am not seeing anything in response. but i see everyday dataset is refreshing successfully. could you please throw some light on why response is empty

{

“Response”: “”,

“ADFWebActivityResponseHeaders”: {

mattias

Hi Aran,

that’s correct, the response will be empty. You will just received a status 202 Accepted. It’s a “fire-and-forget” process.

https://learn.microsoft.com/en-us/rest/api/power-bi/datasets/refresh-dataset